#LlamaIndex Frameworks

Explore the inner workings of LlamaIndex, enhancing LLMs for streamlined natural language processing, boosting performance and efficiency.

#Large Language Model Meta AI#Power of LLMs#Custom Data Integration#Expertise in Machine Learning#Expertise in Prompt Engineering#LlamaIndex Frameworks#LLM Applications

Conversational AI Technical Lead

Job title: Conversational AI Technical Lead
Company: Qualcomm
Job description: Technology Group IT Programmer Analyst General Summary: Qualcomm IT is seeking a Lead Conversational AI Developer… and Frameworks such as LangChain, LlamaIndex, and Streamlit. Knowledge and implementation experience of chatbot technologies using…
Expected salary:
Location: Hyderabad, Telangana
Job date: Fri, 25 Apr…

Multimodal AI Pipelines: Building Scalable, Agentic, and Generative Systems for the Enterprise

Introduction

Today’s most advanced AI systems must interpret and integrate diverse data types—text, images, audio, and video—to deliver context-aware, intelligent responses. Multimodal AI, once an academic pursuit, is now a cornerstone of enterprise-scale AI pipelines, enabling businesses to deploy autonomous, agentic, and generative AI at unprecedented scale. As organizations seek to harness these capabilities, they face a complex landscape of technical, operational, and ethical challenges. This article distills the latest research, real-world case studies, and practical insights to guide AI practitioners, software architects, and technology leaders in building and scaling robust, multimodal AI pipelines.

For those interested in developing skills in this area, an Agentic AI course can provide foundational knowledge of autonomous decision-making systems. Generative AI training, in turn, covers how AI models create new content. Building agentic RAG systems step-by-step requires a solid understanding of both agentic and generative AI principles.

The Evolution of Agentic and Generative AI in Software Engineering

Over the past decade, AI in software engineering has evolved from rule-based, single-modality systems to sophisticated, multimodal architectures. Early AI applications focused narrowly on tasks like text classification or image recognition. The advent of deep learning and transformer architectures unlocked new possibilities, but it was the emergence of agentic and generative AI that truly redefined the field.

Agentic AI refers to systems capable of autonomous decision-making and action. These systems can reason, plan, and interact dynamically with users and environments. Generative AI, exemplified by models like GPT-4, Gemini, and Llama, goes beyond prediction to create new content, answer complex queries, and simulate human-like interaction. A comprehensive Agentic AI course can help developers understand how to design and implement these systems effectively.

The integration of multimodal capabilities—processing text, images, and audio simultaneously—has amplified the potential of these systems. Applications now range from intelligent assistants and content creation tools to autonomous agents that navigate complex, real-world scenarios. Generative AI training is essential for developing models that can generate new content across different modalities. To build agentic RAG systems step-by-step, developers must master the integration of retrieval and generation capabilities, ensuring that systems can both retrieve relevant information and generate coherent responses.

Key Frameworks, Tools, and Deployment Strategies

The rapid evolution of multimodal AI has been accompanied by a proliferation of frameworks and tools designed to streamline development and deployment:

LLM Orchestration: Modern AI pipelines increasingly rely on orchestrating multiple large language models (LLMs) alongside specialized models (e.g., vision transformers, audio encoders). Tools like LangChain, LlamaIndex, and Hugging Face Transformers help developers integrate and chain these models, so complex multimodal workflows can be built with relative ease. Orchestration is a core topic in Generative AI training because it is how individual models are composed into larger, more capable applications.

Autonomous Agents: Frameworks such as AutoGPT and BabyAGI provide blueprints for creating agentic systems that can autonomously plan, execute, and adapt based on multimodal inputs. These agents are increasingly deployed in customer service, content moderation, and decision support roles. An Agentic AI course would cover the design principles of such autonomous systems.

MLOps for Generative Models: Operationalizing generative and multimodal AI requires robust MLOps practices. Platforms like Galileo AI offer advanced monitoring, evaluation, and debugging capabilities for multimodal pipelines, ensuring reliability and performance at scale. This is crucial for maintaining the integrity of agentic RAG systems.

Multimodal Processing Pipelines: The typical pipeline for multimodal AI involves data collection, preprocessing, feature extraction, fusion, model training, and evaluation. Each step presents unique challenges, from ensuring data quality and alignment across modalities to managing the computational demands of large-scale training. Generative AI training focuses on optimizing these pipelines for content generation tasks.

Vector Database Management: Tools such as Milvus (an open-source vector database) and Datavolo (a dataflow platform for unstructured data) help teams manage unstructured data and embeddings securely and at scale. This is critical for efficient retrieval and processing in multimodal systems. It also matters when building agentic RAG systems step-by-step, because retrieval quality depends on how well that data is managed.

Software Engineering Best Practices for Multimodal AI

Building and scaling multimodal AI pipelines demands more than cutting-edge models—it requires a holistic approach to system design and deployment. Key software engineering best practices include:

Version Control and Reproducibility: Every component of the AI pipeline should be versioned and reproducible, enabling effective debugging, auditing, and compliance. This is particularly important when integrating agentic AI and generative AI components.

Automated Testing: Comprehensive test suites for data validation, model behavior, and integration points help catch issues early and reduce deployment risks. Generative AI training emphasizes the importance of testing generated content for coherence and relevance.

Security and Compliance: Protecting sensitive data—especially in multimodal systems that process images or audio—requires robust encryption, access controls, and compliance with regulations such as GDPR and HIPAA. This is a critical aspect of building agentic RAG systems step-by-step, ensuring that systems are secure and compliant.

Documentation and Knowledge Sharing: Clear, up-to-date documentation and collaborative tools (e.g., Confluence, Notion) enable cross-functional teams to work efficiently and maintain system integrity over time. An Agentic AI course would highlight the importance of documentation in complex AI systems.

Advanced Tactics for Scalable, Reliable AI Systems

Scaling autonomous, multimodal AI pipelines requires advanced tactics and innovative approaches:

Modular Architecture: Designing systems with modular, interchangeable components allows teams to update or replace individual models without disrupting the entire pipeline. This is especially critical for multimodal systems, where new modalities or improved models may be introduced over time. Generative AI training emphasizes modularity to facilitate updates and scalability.

Feature Fusion Strategies: Effective integration of features from different modalities is a key challenge. Techniques such as early fusion (combining raw data), late fusion (combining model outputs), and cross-modal attention mechanisms are used to improve performance and robustness. Building agentic RAG systems step-by-step involves mastering these fusion strategies.

Transfer Learning and Pretraining: Leveraging pretrained models (e.g., CLIP for vision-language tasks, ViT for image processing) accelerates development and improves generalization across modalities. This is a common practice in Generative AI training to enhance model performance.

Scalable Infrastructure: Deploying multimodal AI at scale requires robust infrastructure. This includes distributed training frameworks (e.g., PyTorch Lightning, TensorFlow's tf.distribute API) and efficient inference engines (e.g., ONNX Runtime, NVIDIA Triton Inference Server). An Agentic AI course would cover the design of scalable infrastructure for autonomous systems.

Continuous Monitoring and Feedback Loops: Real-time monitoring of model performance, data drift, and user feedback is essential for maintaining reliability and iterating quickly. This is crucial for building agentic RAG systems step-by-step, ensuring continuous improvement.
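To make the fusion strategies above more concrete, here is a minimal sketch in plain Python. It assumes each modality has already been turned into a feature vector (for early fusion) or a list of per-class probabilities (for late fusion). The function names and the 0.5 default weight are illustrative choices, not taken from any framework, and cross-modal attention is not shown.

```python
from typing import List, Sequence


def early_fusion(text_feats: Sequence[float], image_feats: Sequence[float]) -> List[float]:
    """Early fusion: concatenate per-modality features into one vector for a single model."""
    return list(text_feats) + list(image_feats)


def late_fusion(text_probs: Sequence[float], image_probs: Sequence[float],
                text_weight: float = 0.5) -> List[float]:
    """Late fusion: weighted average of class probabilities from two separate models."""
    if len(text_probs) != len(image_probs):
        raise ValueError("both models must score the same set of classes")
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0 and 1")
    return [text_weight * t + (1.0 - text_weight) * i
            for t, i in zip(text_probs, image_probs)]


if __name__ == "__main__":
    # Hypothetical 2-dim text features and 3-dim image features -> one 5-dim input.
    print(early_fusion([0.1, 0.2], [0.7, 0.3, 0.5]))
    # Hypothetical class probabilities from a text model and an image model.
    print(late_fusion([0.9, 0.1], [0.6, 0.4], text_weight=0.5))
```

The two approaches trade off differently:

- **Early fusion** lets a single model learn interactions between modalities, but it needs inputs that are aligned.
- **Late fusion** keeps the per-modality models independent, which fits the modular architecture described above. The cost is that it cannot capture those cross-modal interactions.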

Ethical and Regulatory Considerations

As multimodal AI systems become more pervasive, ethical and regulatory considerations grow in importance:

Bias Mitigation: Ensuring that models are trained on diverse, representative datasets and regularly audited for bias. This is a critical aspect of Generative AI training, as biased models can generate inappropriate content.

Privacy and Data Protection: Implementing robust data governance practices to protect user privacy and comply with global regulations. An Agentic AI course would emphasize the importance of ethical considerations in AI system design.

Transparency and Explainability: Providing clear explanations of model decisions and maintaining audit trails for accountability. This is essential for building agentic RAG systems step-by-step, ensuring transparency and trust in AI decisions.

Cross-Functional Collaboration for AI Success

Building and scaling multimodal AI pipelines is inherently interdisciplinary. It requires close collaboration between data scientists, software engineers, product managers, and business stakeholders. Key aspects of successful collaboration include:

Shared Goals and Metrics: Aligning on business objectives and key performance indicators (KPIs) ensures that technical decisions are driven by real-world value. Generative AI training emphasizes the importance of collaboration to ensure that AI systems meet business needs.

Agile Development Practices: Regular standups, sprint planning, and retrospective meetings foster transparency and rapid iteration. An Agentic AI course would cover agile methodologies for developing complex AI systems.

Domain Expertise Integration: Involving domain experts ensures that models are contextually relevant and ethically sound. This is crucial for building agentic RAG systems step-by-step, ensuring that AI systems are relevant and effective.

Feedback Loops: Establishing channels for continuous feedback from end-users and stakeholders helps teams identify issues early and prioritize improvements. This is essential for Generative AI training, as feedback loops help refine generated content.

Measuring Success: Analytics and Monitoring

The true measure of an AI pipeline’s success lies in its ability to deliver consistent, high-quality results at scale. Key metrics and practices include:

Model Performance Metrics: Accuracy, precision, recall, and F1 scores for classification tasks; BLEU, ROUGE, or METEOR for generative tasks. Generative AI training focuses on optimizing these metrics for content generation tasks.

Operational Metrics: Latency, throughput, and resource utilization are critical for ensuring that systems can handle production workloads. An Agentic AI course would cover the importance of monitoring operational metrics for autonomous systems.

User Experience Metrics: User satisfaction, engagement, and task completion rates provide insights into the real-world impact of AI deployments. Building agentic RAG systems step-by-step involves monitoring user experience metrics to ensure that systems meet user needs.

Monitoring and Alerting: Real-time dashboards and automated alerts help teams detect and respond to issues promptly, minimizing downtime and maintaining trust. This is crucial for Generative AI training, as continuous monitoring ensures that AI systems remain reliable and efficient.
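For the classification metrics listed above, precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives. Below is a minimal standard-library sketch for the binary case. The function name and the convention of returning 0.0 when a denominator is zero are assumptions. Generative metrics such as BLEU or ROUGE need dedicated libraries and are not shown.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        positive: int = 1) -> Tuple[float, float, float]:
    """Binary precision, recall, and F1 for the given positive label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0]
    y_pred = [1, 1, 1, 0, 0]
    print(precision_recall_f1(y_true, y_pred))  # 2 TP, 1 FP, 1 FN
```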

Case Study: Meta’s Multimodal AI Journey

Meta’s recent launch of the Llama 4 family, including the natively multimodal Llama 4 Scout and Llama 4 Maverick models, offers a compelling case study in the evolution and deployment of agentic, generative AI at scale. This case study highlights the importance of Generative AI training in developing models that can process and generate content across multiple modalities.

Background and Motivation

Meta recognized early on that the future of AI lies in the seamless integration of multiple modalities. Traditional LLMs, while powerful, were limited by their focus on text. To deliver more immersive, context-aware experiences, Meta set out to build models that could process and reason across text, images, and audio. Building agentic RAG systems step-by-step requires a similar approach, integrating retrieval and generation capabilities to create robust AI systems.

Technical Challenges

The development of the Llama 4 models presented several technical hurdles:

Data Alignment: Ensuring that data from different modalities (e.g., text captions and corresponding images) were accurately aligned during training. This challenge is common in Generative AI training, where data quality is crucial for model performance.

Computational Complexity: Training multimodal models at scale required significant computational resources and innovative optimization techniques. An Agentic AI course would cover strategies for managing computational complexity in autonomous systems.

Pipeline Orchestration: Integrating multiple specialized models (e.g., vision transformers, audio encoders) into a cohesive pipeline demanded robust software engineering practices. This is essential for building agentic RAG systems step-by-step, ensuring that systems are scalable and efficient.

Actionable Tips and Lessons Learned

Based on the experiences of Meta and other leading organizations, here are practical tips and lessons for AI teams embarking on the journey to scale multimodal, autonomous AI pipelines:

Start with a Clear Use Case: Identify a specific business problem that can benefit from multimodal AI, and focus on delivering value early. Generative AI training emphasizes the importance of clear use cases for AI development.

Invest in Data Quality: High-quality, well-aligned data is the foundation of successful multimodal systems. Invest in robust data collection, cleaning, and annotation processes. An Agentic AI course would highlight the importance of data quality for autonomous systems.

Embrace Modularity: Design systems with modular, interchangeable components to facilitate updates and scalability. This is crucial for building agentic RAG systems step-by-step, allowing for easy updates and maintenance.

Leverage Pretrained Models: Use pretrained models for each modality to accelerate development and improve performance. Generative AI training often relies on pretrained models to enhance model capabilities.

Monitor Continuously: Implement real-time monitoring and feedback loops to detect issues early and iterate quickly. This is essential for Generative AI training, ensuring that AI systems remain reliable and efficient.

Foster Cross-Functional Collaboration: Involve stakeholders from across the organization to ensure that technical decisions are aligned with business goals. An Agentic AI course would emphasize the importance of collaboration in AI development.

Prioritize Security and Compliance: Protect sensitive data and ensure that systems comply with relevant regulations. This is critical for building agentic RAG systems step-by-step, ensuring that systems are secure and compliant.

Iterate and Learn: Treat each deployment as a learning opportunity, and use feedback to drive continuous improvement. Generative AI training emphasizes the importance of iteration and learning in AI development.

Conclusion

Building scalable multimodal AI pipelines is one of the most exciting and challenging frontiers in artificial intelligence today. By leveraging the latest frameworks, tools, and deployment strategies—and applying software engineering best practices—teams can build systems that are not only powerful but also reliable, secure, and aligned with business objectives. The journey is complex, but the rewards are substantial: richer user experiences, new revenue streams, and a competitive edge in an increasingly AI-driven world. For AI practitioners, software architects, and technology leaders, the message is clear: embrace the challenge, invest in collaboration and continuous learning, and lead the way in the multimodal AI revolution.

Generative AI Platform Development Explained: Architecture, Frameworks, and Use Cases That Matter in 2025

The rise of generative AI is no longer confined to experimental labs or tech demos—it’s transforming how businesses automate tasks, create content, and serve customers at scale. In 2025, companies are not just adopting generative AI tools—they’re building custom generative AI platforms that are tailored to their workflows, data, and industry needs.

This blog dives into the architecture, leading frameworks, and powerful use cases of generative AI platform development in 2025. Whether you're a CTO, AI engineer, or digital transformation strategist, this is your comprehensive guide to making sense of this booming space.

Why Generative AI Platform Development Matters Today

Generative AI has matured from narrow use cases (like text or image generation) to enterprise-grade platforms capable of handling complex workflows. Here’s why organizations are investing in custom platform development:

Data ownership and compliance: Public services like ChatGPT may not offer the data-handling and privacy guarantees many businesses need.

Domain-specific intelligence: Off-the-shelf models often lack nuance for healthcare, finance, law, etc.

Workflow integration: Businesses want AI to plug into their existing tools—CRMs, ERPs, ticketing systems—not operate in isolation.

Customization and control: A platform allows fine-tuning, governance, and feature expansion over time.

Core Architecture of a Generative AI Platform

A generative AI platform is more than just a language model with a UI. It’s a modular system with several architectural layers working in sync. Here’s a breakdown of the typical architecture:

1. Foundation Model Layer

This is the brain of the system, typically built on:

LLMs (e.g., GPT-4, Claude, Mistral, LLaMA 3)

Multimodal models (for image, text, audio, or code generation)

You can:

Use open-source models

Fine-tune foundation models

Integrate multiple models via a routing system
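Routing between several models can start as a simple rule-based dispatcher. The sketch below is hypothetical throughout:

- The route names ("code", "long_context", "general") are placeholders.
- The keyword rules and the 2,000-character threshold are illustrative.
- Each model is a stub function, where a real platform would call an LLM endpoint.

```python
from typing import Callable, Dict

Model = Callable[[str], str]


def choose_route(prompt: str, long_threshold: int = 2000) -> str:
    """Pick a route name using simple, hypothetical heuristics."""
    text = prompt.lower()
    if "traceback" in text or "def " in text:
        return "code"  # looks like a programming question
    if len(prompt) > long_threshold:
        return "long_context"  # needs a model with a larger context window
    return "general"


def route(prompt: str, models: Dict[str, Model]) -> str:
    """Send the prompt to whichever model the router selects."""
    return models[choose_route(prompt)](prompt)


if __name__ == "__main__":
    stub_models: Dict[str, Model] = {
        "code": lambda p: "[code model] " + p[:30],
        "long_context": lambda p: "[long-context model] " + p[:30],
        "general": lambda p: "[general model] " + p[:30],
    }
    print(route("Why does this traceback mention KeyError?", stub_models))
    print(route("Summarize our Q3 sales notes.", stub_models))
```

The keyword rules could later be replaced by a small classifier. Keeping the routing decision behind one function makes that swap local.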

2. Retrieval-Augmented Generation (RAG) Layer

This layer allows dynamic grounding of the model in your enterprise data using:

Vector databases (e.g., Pinecone, Weaviate, FAISS)

Embeddings for semantic search

Document pipelines (PDFs, SQL, APIs)

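RAG helps keep generative outputs factual, current, and contextual by grounding them in retrieved enterprise data, although it reduces hallucination rather than eliminating it.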
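The retrieval step can be illustrated without any external service. In this sketch, two stand-ins replace real components:

- A bag-of-words term count stands in for a real embedding model.
- A Python list stands in for a vector database such as Pinecone, Weaviate, or FAISS.

The function names and the prompt wording are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase term counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the model by placing retrieved passages in front of the question."""
    context = "\n".join(f"- {d}" for _, d in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    knowledge_base = [
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "Refund requests need an order number.",
    ]
    print(build_prompt("How long do refunds take?", knowledge_base, k=1))
```

Exact-word matching treats "refund" and "refunds" as unrelated terms. Learned embeddings largely exist to close that kind of gap.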

3. Orchestration & Agent Layer

In 2025, most platforms include AI agents to perform tasks:

Execute multi-step logic

Query APIs

Take user actions (e.g., book, update, generate report)

Frameworks like LangChain, LlamaIndex, and CrewAI are widely used.

4. Data & Prompt Engineering Layer

The control center for:

Prompt templates

Tool calling

Memory persistence

Feedback loops for fine-tuning
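As a small illustration of the prompt-template part of this layer, the sketch below uses Python's built-in string.Template. The template text, its placeholders, and the render_prompt helper are hypothetical. The point is that a missing field fails loudly (substitute() raises KeyError) instead of silently sending an incomplete prompt to the model.

```python
from string import Template

# Hypothetical support-assistant template; the placeholders are illustrative.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $company.\n"
    "Reply in a $tone tone and cite the context when you use it.\n\n"
    "Context:\n$context\n\n"
    "Question: $question"
)


def render_prompt(template: Template, **fields: str) -> str:
    """Fill a prompt template; substitute() raises KeyError if a placeholder is missing."""
    return template.substitute(**fields)


if __name__ == "__main__":
    print(render_prompt(
        SUPPORT_PROMPT,
        company="Acme",
        tone="friendly",
        context="Refunds take 5 business days.",
        question="When will I get my refund?",
    ))
```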

5. Security & Governance Layer

Enterprise-grade platforms include:

Role-based access

Prompt logging

Data redaction and PII masking

Human-in-the-loop moderation
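Data redaction and PII masking can be prototyped with regular expressions before prompts or logs leave the platform. The two patterns below are illustrative assumptions only. They will miss many PII formats and can over-match long digit strings, so production systems generally need a dedicated PII detection tool.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each detected PII span with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
```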

6. UI/UX & API Layer

This exposes the platform to users via:

Chat interfaces (Slack, Teams, Web apps)

APIs for integration with internal tools

Dashboards for admin controls

Popular Frameworks Used in 2025

Here's a quick overview of frameworks dominating generative AI platform development today:

| Framework | Purpose | Why It Matters |
|---|---|---|
| LangChain | Agent orchestration & tool use | Dominant for building AI workflows |
| LlamaIndex | Indexing + RAG | Powerful for knowledge-based apps |
| Ray + HuggingFace | Scalable model serving | Production-ready deployments |
| FastAPI | API backend for GenAI apps | Lightweight and easy to scale |
| Pinecone / Weaviate | Vector DBs | Core for context-aware outputs |
| OpenAI Function Calling / Tools | Tool use & plugin-like behavior | Plug-in capabilities without agents |
| Guardrails.ai / Rebuff.ai | Output validation | For safe and filtered responses |

Most Impactful Use Cases of Generative AI Platforms in 2025

Custom generative AI platforms are now being deployed across virtually every sector. Below are some of the most impactful applications:

1. AI Customer Support Assistants

Auto-resolve 70% of tickets using contextual data from the CRM and knowledge base

Integrate with Zendesk, Freshdesk, Intercom

Use RAG to pull product info dynamically

2. AI Content Engines for Marketing Teams

Generate email campaigns, ad copy, and product descriptions

Align with tone, brand voice, and regional nuances

Automate A/B testing and SEO optimization

3. AI Coding Assistants for Developer Teams

Context-aware suggestions from internal codebase

Documentation generation, test script creation

Debugging assistant with natural language inputs

4. AI Financial Analysts for Enterprise

Generate earnings summaries, budget predictions

Parse and summarize internal spreadsheets

Draft financial reports with integrated charts

5. Legal Document Intelligence

Draft NDAs, contracts based on templates

Highlight risk clauses

Translate legal jargon to plain language

6. Enterprise Knowledge Assistants

Index all internal documents, chat logs, SOPs

Let employees query processes instantly

Enforce role-based visibility

Challenges in Generative AI Platform Development

Despite the promise, building a generative AI platform isn’t plug-and-play. Key challenges include:

Data quality and labeling: Garbage in, garbage out.

Latency in RAG systems: Slow response times affect UX.

Model hallucination: Even with context, LLMs can fabricate.

Scalability issues: From GPU costs to query limits.

Privacy & compliance: Especially in finance, healthcare, legal sectors.

What’s New in 2025?

Private LLMs: Enterprises increasingly train or fine-tune their own models (via platforms like MosaicML, Databricks).

Multi-Agent Systems: Agent networks are collaborating to perform tasks in parallel.

Guardrails and AI Policy Layers: Compliance-ready platforms with audit logs, content filters, and human approvals.

Auto-RAG Pipelines: Tools now auto-index and update knowledge bases without manual effort.

Conclusion

Generative AI platform development in 2025 is not just about building chatbots—it's about creating intelligent ecosystems that plug into your business, speak your data, and drive real ROI. With the right architecture, frameworks, and enterprise-grade controls, these platforms are becoming the new digital workforce.

A while back, I wanted to go deep into AI agents—how they work, how they make decisions, and how to build them. But every good resource seemed locked behind a paywall.

Then, I found a goldmine of free courses.

No fluff. No sales pitch. Just pure knowledge from the best in the game. Here’s what helped me (and might help you too):

1️⃣ HuggingFace – Covers theory, design, and hands-on practice with AI agent libraries like smolagents, LlamaIndex, and LangGraph.

2️⃣ LangGraph – Teaches AI agent debugging, multi-step reasoning, and search capabilities—straight from the experts at LangChain and Tavily.

3️⃣ LangChain – Focuses on LCEL (LangChain Expression Language) to build custom AI workflows faster.

4️⃣ crewAI – Shows how to create teams of AI agents that work together on complex tasks. Think of it as AI teamwork at scale.

5️⃣ Microsoft & Penn State – Introduces AutoGen, a framework for designing AI agents with roles, tools, and planning strategies.

6️⃣ Microsoft AI Agents Course – A 10-lesson deep dive into agent fundamentals, available in multiple languages.

7️⃣ Google’s AI Agents Course – Teaches multi-modal AI, API integrations, and real-world deployment using Gemini 1.5, Firebase, and Vertex AI.

If you’ve ever wanted to build AI agents that actually work in the real world, this list is all you need to start. No excuses. Just free learning.

Which of these courses are you diving into first? Let’s talk!

#ai#cizotechnology#innovation#mobileappdevelopment#appdevelopment#ios#techinnovation#app developers#iosapp#mobileapps#AI#MachineLearning#FreeLearning

AI Agent Development: A Complete Guide to Building Intelligent Autonomous Systems in 2025

In 2025, the world of artificial intelligence (AI) is no longer just about static algorithms or rule-based automation. The era of intelligent autonomous systems—AI agents that can perceive, reason, and act independently—is here. From virtual assistants that manage projects to AI agents that automate customer support, sales, and even coding, the possibilities are expanding at lightning speed.

This guide will walk you through everything you need to know about AI agent development in 2025—what it is, why it matters, how it works, and how to build intelligent, goal-driven agents that can drive real-world results for your business or project.

What Is an AI Agent?

An AI agent is a software entity capable of autonomous decision-making and action based on input from its environment. These agents can:

Perceive surroundings (input)

Analyze context using data and memory

Make decisions based on goals or rules

Execute tasks or respond intelligently

The key feature that sets AI agents apart from traditional automation is their autonomy—they don’t just follow a script; they reason, adapt, and even collaborate with humans or other agents.
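The perceive, analyze, decide, and execute cycle above can be sketched as a tiny class. This is a toy under stated assumptions:

- The decide() method is a hypothetical keyword rule standing in for an LLM call.
- The tools are stubs.
- Memory is just a running log of observations and actions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class MiniAgent:
    """Toy perceive -> decide -> act loop with a running memory log.

    decide() is a hypothetical keyword rule standing in for an LLM call.
    """
    tools: Dict[str, Callable[[str], str]]
    memory: List[str] = field(default_factory=list)

    def perceive(self, observation: str) -> str:
        self.memory.append(f"observed: {observation}")
        return observation.strip()

    def decide(self, observation: str) -> str:
        # A real agent would pass the observation plus memory to a model
        # and ask it to choose a tool; this rule only looks for a keyword.
        return "weather" if "weather" in observation.lower() else "echo"

    def act(self, tool_name: str, observation: str) -> str:
        result = self.tools[tool_name](observation)
        self.memory.append(f"acted: {tool_name} -> {result}")
        return result

    def step(self, observation: str) -> str:
        obs = self.perceive(observation)
        return self.act(self.decide(obs), obs)


if __name__ == "__main__":
    agent = MiniAgent(tools={
        "weather": lambda q: "Sunny (stub data)",
        "echo": lambda q: f"You said: {q}",
    })
    print(agent.step("What's the weather in Paris?"))
    print(agent.step("hello"))
    print(agent.memory)
```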

Why AI Agents Matter in 2025

The rise of AI agents is being driven by major technological and business trends:

LLMs (Large Language Models) like GPT-4 and Claude now provide reasoning, summarization, and planning skills.

Multi-agent systems allow task delegation across specialized agents.

RAG (Retrieval-Augmented Generation) enhances agents with real-time, context-aware responses.

No-code/low-code tools make building agents more accessible.

Enterprise use cases are exploding in sectors like healthcare, finance, HR, logistics, and more.

📊 According to Gartner, by 2025, 80% of businesses will use AI agents in some form to enhance decision-making and productivity.

Core Components of an Intelligent AI Agent

To build a powerful AI agent, you need to architect it with the following components:

1. Perception (Input Layer)

This is how the agent collects data—text, voice, API input, or sensor data.

2. Memory and Context

Agents need persistent memory to reference prior interactions, goals, and environment state. Vector databases, Redis, and LangChain memory modules are popular choices.
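The simplest form of conversational memory is a sliding window of recent turns, rendered back into the next prompt. The sketch below is a hypothetical in-process version and does not use LangChain's memory API. A persistent store such as Redis or a vector database would replace the deque once memory must survive restarts or be searched semantically.

```python
from collections import deque
from typing import Deque, Tuple


class ConversationMemory:
    """Sliding-window memory: keeps only the most recent `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        # deque(maxlen=...) silently drops the oldest item when full.
        self._turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user_msg: str, agent_msg: str) -> None:
        self._turns.append((user_msg, agent_msg))

    def as_context(self) -> str:
        """Render remembered turns as text to prepend to the next prompt."""
        return "\n".join(f"User: {u}\nAgent: {a}" for u, a in self._turns)

    def __len__(self) -> int:
        return len(self._turns)


if __name__ == "__main__":
    mem = ConversationMemory(max_turns=2)
    mem.add("Hi", "Hello! How can I help?")
    mem.add("Book a meeting Friday", "Which time works for you?")
    mem.add("10am", "Booked for Friday at 10am.")
    print(mem.as_context())  # only the last two exchanges remain
```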

3. Reasoning Engine

This is where LLMs come in—models like GPT-4, Claude, or Gemini help agents analyze data, make decisions, and solve problems.

4. Planning and Execution

Agents break down complex tasks into sub-tasks using tools like:

LangGraph for workflows

Auto-GPT / BabyAGI for autonomous loops

Function calling / Tool use for real-world interaction
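Function calling generally means the model proposes a tool name plus arguments, and your code decides whether and how to run it. The sketch below makes several assumptions:

- It uses a simplified JSON shape, {"name": ..., "arguments": {...}}, that is not any specific provider's wire format.
- The two tools are hypothetical stubs.
- The registry acts as an allow-list, so unknown tool names are rejected rather than executed.

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the agent is allowed to call (an allow-list).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def get_order_status(order_id: str) -> str:
    return f"Order {order_id}: shipped"  # stub; a real tool would query a backend


def dispatch(tool_call_json: str) -> Any:
    """Execute a model-proposed call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"model requested an unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return TOOLS[name](**args)


if __name__ == "__main__":
    print(dispatch('{"name": "get_order_status", "arguments": {"order_id": "A123"}}'))
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```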

5. Tools and Integrations

Agents often rely on external tools to act:

CRM systems (HubSpot, Salesforce)

Code execution (Python interpreters)

Browsers, email clients, APIs, and more

6. Feedback and Learning

Advanced agents use reinforcement learning, including reinforcement learning from human feedback (RLHF), to improve their performance over time.

Tools and Frameworks to Build AI Agents

As of 2025, these tools and frameworks are leading the way:

LangChain: For chaining LLM operations and memory integration.

AutoGen by Microsoft: Supports collaborative multi-agent systems.

CrewAI: Focuses on structured agent collaboration.

OpenAgents: Open-source ecosystem for agent simulation.

Haystack, LlamaIndex, Weaviate: RAG and semantic search capabilities.

You can combine these with platforms like OpenAI, Anthropic, Google, or Mistral models based on your performance and budget requirements.

Step-by-Step Guide to AI Agent Development in 2025

Let’s break down the process of building a functional AI agent:

Step 1: Define the Agent’s Goal

What should the agent accomplish? Be specific. For example:

“Book meetings from customer emails”

“Generate product descriptions from images”

Step 2: Choose the Right LLM

Select a model based on needs:

GPT-4 or Claude for general-purpose reasoning

Gemini for multi-modal input

Local models (like Mistral or LLaMA 3) for privacy-sensitive use

Step 3: Add Tools and APIs

Enable the agent to act using:

Function calling / tool use

Plugin integrations

Web search, databases, messaging tools, etc.

Step 4: Build Reasoning + Memory Pipeline

Use LangChain, LangGraph, or AutoGen to:

Store memory

Chain reasoning steps

Handle retries, summarizations, etc.
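LangChain, LangGraph, and AutoGen each have their own retry options. Independent of any framework, the underlying pattern is usually exponential backoff around a flaky model or tool call. In this minimal sketch, the helper name and the defaults (3 attempts, 0.5 s base delay) are assumptions. The sleep function is injectable so the behavior can be tested without real waiting.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                 retry_on: Tuple[Type[BaseException], ...] = (Exception,),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying on the given exceptions with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_llm_call() -> str:
        # Hypothetical call that fails twice with a transient error, then succeeds.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient network error")
        return "summary text"

    print(with_retries(flaky_llm_call, attempts=3, base_delay=0.1))
```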

Step 5: Test in a Controlled Sandbox

Run simulations before live deployment. Analyze how the agent handles edge cases, errors, and decision-making.

Step 6: Deploy and Monitor

Use tools like LangSmith or Weights & Biases for agent observability. Continuously improve the agent based on user feedback.

Key Challenges in AI Agent Development

While AI agents offer massive potential, they also come with risks:

Hallucinations: LLMs may generate false outputs.

Security: Tool use can be exploited if not sandboxed.

Autonomy Control: Balancing autonomy vs. user control is tricky.

Cost and Latency: LLM queries and tool usage may get expensive.

Mitigation strategies include:

Grounding responses using RAG

Setting execution boundaries

Rate-limiting and cost monitoring
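Two of these mitigations, execution boundaries and cost monitoring, can be enforced with a per-run budget that the agent loop charges before each step. In this sketch:

- The class names, the default limits, and the per-step costs in the example are hypothetical.
- Real costs would be derived from a provider's token pricing.
- Request rate-limiting is a separate concern and is not shown.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when an agent run would exceed its step or cost limit."""


@dataclass
class RunBudget:
    max_steps: int = 10
    max_cost_usd: float = 1.00
    steps: int = 0
    cost_usd: float = 0.0

    def charge(self, cost_usd: float) -> None:
        """Record one agent step; raise before the run exceeds its limits."""
        if self.steps + 1 > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} reached")
        if self.cost_usd + cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost limit ${self.max_cost_usd:.2f} reached")
        self.steps += 1
        self.cost_usd += cost_usd


if __name__ == "__main__":
    budget = RunBudget(max_steps=3, max_cost_usd=0.05)
    try:
        for step_cost in [0.01, 0.02, 0.03]:  # hypothetical per-step costs
            budget.charge(step_cost)
            print(f"step {budget.steps}: total ${budget.cost_usd:.2f}")
    except BudgetExceeded as err:
        print("stopping agent:", err)
```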

AI Agent Use Cases Across Industries

Here’s how businesses are using AI agents in 2025:

🏥 Healthcare

Symptom triage agents

Medical document summarizers

Virtual health assistants

💼 HR & Recruitment

Resume shortlisting agents

Onboarding automation

Employee Q&A bots

📊 Finance

Financial report analysis

Portfolio recommendation agents

Compliance document review

🛒 E-commerce

Personalized shopping assistants

Dynamic pricing agents

Product categorization bots

📧 Customer Support

AI service desk agents

Multi-lingual chat assistants

Voice agents for call centers

What’s Next for AI Agents in 2025 and Beyond?

Expect rapid evolution in these areas:

Agentic operating systems (Autonomous workplace copilots)

Multi-modal agents (Image, voice, video + text)

Agent marketplaces (Buy and sell pre-trained agents)

On-device agents (Running LLMs locally for privacy)

We’re moving toward a future where every individual and organization may have their own personalized AI team—a set of agents working behind the scenes to get things done.

Final Thoughts

AI agent development in 2025 is not just a trend—it’s a paradigm shift. Whether you’re building for productivity, innovation, or scale, AI agents are unlocking a new level of intelligence and autonomy.

With the right tools, frameworks, and understanding, you can start creating your own intelligent systems today and stay ahead in the AI-driven future.

GitHub Copilot for Azure: New Tools Help AI App Development

Azure at GitHub Universe: New resources to make developing AI apps easier

Microsoft Azure is part of this shift as a company that builds tools for other developers. Many of its employees will be at GitHub Universe to share their experiences and learn from others about how artificial intelligence is changing the way we work. I'm thrilled to share new features and resources that bring Microsoft Azure AI services into your preferred development tools.

Microsoft’s AI-powered, end-to-end development platform, built on a solid community, seamlessly integrates Visual Studio (VS) Code, GitHub, and Azure to assist you in transforming your apps with AI. For more information, continue reading.

What is GitHub Copilot?

GitHub Copilot is an AI-powered coding assistant that makes it easier and faster for developers to create code. As developers type, it offers contextual aid by suggesting code, which could be a line completion or an entirely new block of code.

Code creation

Copilot can generate full lines and entire functions. You can start writing code and let Copilot finish it, or describe what you want in natural language.

Fixing errors

Copilot can help fix errors in your code or in the terminal.

Learning

Copilot can help you master a new programming language, framework, or code base.

Documentation

Copilot generates documentation comments.

Copilot is powered by a large language model (LLM) that was trained on massive volumes of data and generates new content from it. It works with many programming languages, including JavaScript, Python, Ruby, and TypeScript.

Copilot works in your IDE or command line. It’s also available on GitHub.com for enterprise users.

GitHub Copilot for Azure, your expert, is now available in preview

GitHub Copilot for Azure extends the Copilot Chat features in Visual Studio Code to help you manage resources and deploy apps, connecting with tools you already use, such as GitHub and Visual Studio Code. By typing "@azure", you can get tailored guidance on services and tools without leaving your code. Using Azure Developer CLI (azd) templates to provision and deploy Azure resources speeds up and simplifies development. GitHub Copilot for Azure also helps you troubleshoot and answers questions about costs and resources, so you can spend your time on the work you care about most.

Use AI App Templates to deploy in as little as five minutes

AI App Templates let you get started more quickly and streamline the review and production process, which speeds up your development. AI App Templates can be used directly in the development environment of your choice, including Visual Studio, VS Code, and GitHub Codespaces. Based on your AI use case or situation, GitHub Copilot for Azure can even suggest particular templates for you.

Most importantly, the templates give you freedom and choice, offering a range of models, frameworks, programming languages, and solutions from well-known AI toolchain providers including Arize, LangChain, LlamaIndex, and Pinecone. You can start with individual app components and provision resources across Azure and partner services, or deploy entire apps at once. The templates also include security recommendations, such as using keyless authentication flows and Managed Identity.

Personalize and expand your AI applications

GitHub announced today that GitHub Models is in preview, bringing Azure AI's leading model selection directly to GitHub so you can quickly find, learn about, and experiment with many of the newest and most capable AI models. Building on that, you can now explore and use Azure AI models directly through GitHub Marketplace with the Azure AI model inference API. Free of charge (usage limits apply), you can compare model performance, experiment, and mix and match models, including advanced proprietary and open models that cover a wide range of tasks.

Once you have chosen a model and are ready to configure and deploy, you can sign in to your Azure account to move from free token usage to premium endpoints with enterprise-grade security and monitoring in production.

Use the GitHub Copilot upgrade assistant for Java to streamline Java Runtime updates

Keeping Java apps up to date can take a lot of time. The GitHub Copilot upgrade assistant for Java uses AI to streamline this process so you can update your Java apps with less manual work. Integrated into popular tools such as Visual Studio Code, it creates an upgrade plan and guides you through upgrading from an older Java runtime to a newer version, optionally including dependencies and frameworks such as JUnit and Spring Boot.

Using a dynamic build/repair loop, the assistant automatically resolves issues during the upgrade and leaves any remaining errors for you to fix, so you can apply changes as needed. The process is transparent: you get access to logs, code changes, outputs, and other details, so you stay in full control while benefiting from AI automation at every step. When the upgrade finishes, you can review a detailed summary and inspect every code change, which keeps the process smooth and efficient and frees you to focus on creative work instead of tedious maintenance.

Use CI/CD workflows to scale AI apps with Azure AI evaluation and online A/B testing

Given the trade-offs between cost, risk, and business impact, you need to evaluate your AI applications regularly and run A/B tests at scale. GitHub Actions, which plug into existing GitHub CI/CD workflows, greatly streamline this process. After changes are committed, your CI workflow can use the Azure AI Evaluation SDK to compute metrics such as coherence and fluency and run evaluations automatically. After a successful deployment, CD workflows automatically generate and analyze A/B tests using both custom and off-the-shelf AI model metrics. Along the way, you can also use a GitHub Copilot for Azure plugin that helps with testing, generates analytics, informs decisions, and more.
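The two workflow steps described above, gating a commit on evaluation scores and analyzing an A/B test after deployment, can be illustrated with a small standard-library sketch. This does not use the Azure AI Evaluation SDK; it assumes the coherence and fluency scores have already been computed (here on a hypothetical 1-5 scale) and that the A/B metric is a binary success rate such as hypothetical thumbs-up counts. The A/B analysis is a standard two-proportion z-test, chosen for illustration.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple


def passes_quality_gate(scores: Dict[str, List[float]], thresholds: Dict[str, float]) -> bool:
    """CI gate: True only if the mean score of every metric meets its minimum."""
    return all(mean(scores[metric]) >= minimum for metric, minimum in thresholds.items())


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in success rates between variants A and B.

    Returns (z, p_value), using the pooled proportion under the null hypothesis.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # all successes or all failures: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical evaluation scores and thresholds.
scores = {"coherence": [4, 5, 4], "fluency": [4, 4, 5]}
print(passes_quality_gate(scores, {"coherence": 4.0, "fluency": 4.0}))  # True

# Hypothetical A/B result: successes out of sessions served by each variant.
z, p = two_proportion_z_test(success_a=100, n_a=1000, success_b=150, n_b=1000)
print(f"z={z:.2f}, p={p:.4f}")
```

In a CI job, a `False` from the gate would typically fail the workflow step; the z-test's p-value is one common way to decide whether variant B's improvement is likely to be real rather than noise.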

You can trust Azure with your business, just as we trust it with ours

The platform you choose matters as you explore new AI opportunities for your company. Today, 95% of Fortune 500 companies rely on Azure for their operations. Microsoft also runs its own products, such as Dynamics 365, Bing, Copilot, and Microsoft 365, on Azure, and you have access to the same resources and tools Microsoft uses to build and operate them. Azure's integration with GitHub and Visual Studio Code makes building with AI easier. With more than 60 data center regions worldwide and a dedicated security team, Azure also provides a reliable and secure foundation for your AI projects. These are all strong reasons to use Azure and GitHub to develop your next AI application.

GitHub Copilot pricing

GitHub Copilot has several pricing tiers for individuals, businesses, and organizations:

Copilot Individual

Individual developers, freelancers, students, and educators can pay $10 a month or $100 a year. It is free for verified students, teachers, and maintainers of popular open source projects.

Copilot Business

$19 per user per month for companies looking to enhance developer experience, code quality, and engineering velocity.

Your billing cycle can be switched from monthly to annual at any moment, or the other way around. The modification will become effective at the beginning of your subsequent payment cycle.
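To make the tiers concrete, the arithmetic below compares monthly and annual billing for an individual and estimates the yearly cost of a Business team. It uses only the list prices quoted in this post, which may have changed since publication; the 25-seat team is a hypothetical example.

```python
# List prices as quoted in this post (USD); check GitHub's pricing page for current figures.
INDIVIDUAL_MONTHLY = 10
INDIVIDUAL_ANNUAL = 100
BUSINESS_PER_USER_MONTHLY = 19


def individual_annual_savings() -> int:
    """Savings from paying once a year instead of twelve monthly payments."""
    return INDIVIDUAL_MONTHLY * 12 - INDIVIDUAL_ANNUAL


def business_yearly_cost(seats: int) -> int:
    """Yearly Business cost for `seats` users billed per user per month."""
    return BUSINESS_PER_USER_MONTHLY * 12 * seats


print(individual_annual_savings())  # 20
print(business_yearly_cost(25))  # 5700
```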

Read more on Govindhtech.com

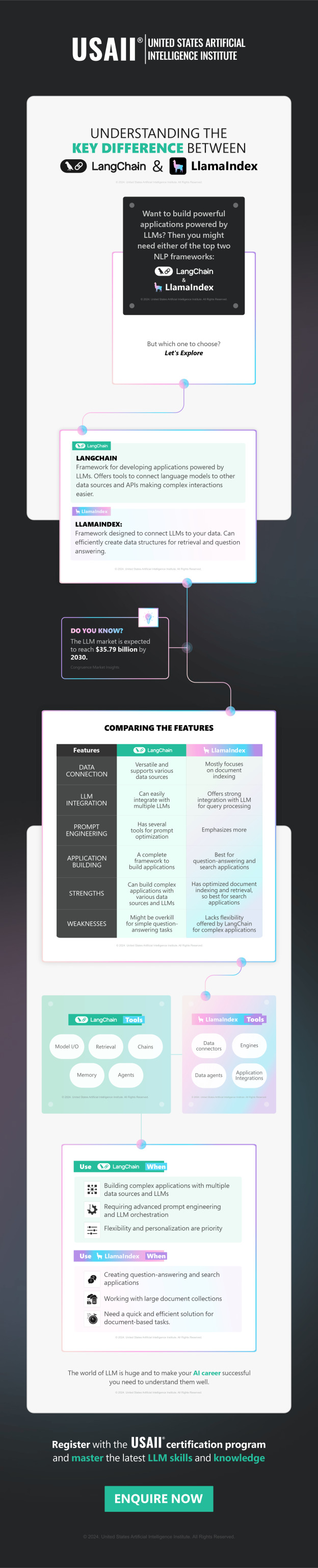

Understanding The Key Difference Between LangChain and LlamaIndex | USAII®

Understand the key differences between the popular LLM frameworks LangChain and LlamaIndex in this comprehensive infographic and advance your AI knowledge.

Read more: https://shorturl.at/aT8cA

Handling and retrieving information from various file types can be challenging. People often struggle to extract content from PDFs and spreadsheets, especially when dealing with large volumes. This process can be time-consuming and inefficient…

Edge 330: Inside DSPy: Stanford University's LangChain Alternative

Check out the latest blog post on DSPy, a new alternative to language model programming frameworks like LangChain and LlamaIndex. It offers a fresh perspective and innovative features that have attracted attention in the LLM community. Learn more here: https://ift.tt/0JPwVAS

AI Engineer CV: ingredients

📌 Key Insights: – AI Engineer Defined: A generalist well-versed in LLM frameworks and proficient at integrating them with private, multimodal data using the leanest infrastructure (such as vector DBs like Deep Lake and tools like LangChain or LlamaIndex), all at optimal cost. – Soft Skills on the Rise: Today's AI engineer needs UX aptitude, empathy, and a keen sense of domain knowledge. –…

How Combining RAG with Streaming Databases Can Transform Real-Time Data Interaction

New Post has been published on https://thedigitalinsider.com/how-combining-rag-with-streaming-databases-can-transform-real-time-data-interaction/

While large language models (LLMs) like GPT-3 and Llama are impressive in their capabilities, they often lack up-to-date information and access to domain-specific data. Retrieval-augmented generation (RAG) addresses these gaps by combining LLMs with information retrieval. This integration allows smooth interaction with real-time data using natural language, which has driven its growing popularity across industries. However, as demand for RAG increases, its dependence on static knowledge has become a significant limitation. This article examines this critical bottleneck and how merging RAG with data streams could unlock new applications in various domains.

How RAGs Redefine Interaction with Knowledge

Retrieval-Augmented Generation (RAG) combines large language models (LLMs) with information retrieval techniques. The key objective is to connect a model’s built-in knowledge with the vast and ever-growing information available in external databases and documents. Unlike traditional models that depend solely on pre-existing training data, RAG enables language models to access real-time external data repositories. This capability allows for generating contextually relevant and factually current responses.

When a user asks a question, RAG efficiently scans through relevant datasets or databases, retrieves the most pertinent information, and crafts a response based on the latest data. This dynamic functionality makes RAG more agile and accurate than models like GPT-3 or BERT, which rely on knowledge acquired during training that can quickly become outdated.

The ability to interact with external knowledge through natural language has made RAG an essential tool for businesses and individuals alike, especially in fields such as customer support, legal services, and academic research, where timely and accurate information is vital.

How RAG Works

Retrieval-augmented generation (RAG) operates in two key phases: retrieval and generation. In the first phase, retrieval, the model scans a knowledge base—such as a database, web documents, or a text corpus—to find relevant information that matches the input query. This process utilizes a vector database, which stores data as dense vector representations. These vectors are mathematical embeddings that capture the semantic meaning of documents or data. When a query is received, the model compares the vector representation of the query against those in the vector database to locate the most relevant documents or snippets efficiently.
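The retrieval phase described above, embedding the query and comparing it against stored document vectors, can be sketched in a few lines. This is a toy illustration, not how a production vector database works: a bag-of-words term-count vector stands in for a learned dense embedding, and every document is scored exhaustively with cosine similarity instead of using approximate nearest-neighbor search.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Brute-force search: score every document against the query and return the top k."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "interest rates rose after the central bank meeting",
    "the patient heart rate is stable",
    "central bank signals more rate hikes",
]
print(retrieve("central bank rate decision", docs, k=2))
# ['central bank signals more rate hikes', 'interest rates rose after the central bank meeting']
```

Swapping `embed` for a real embedding model and the sorted scan for a vector index changes the quality and speed, but not the shape of the retrieval step.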

Once the relevant information is identified, the generation phase begins. The language model processes the input query alongside the retrieved documents, integrating this external context to produce a response. This two-step approach is especially beneficial for tasks that demand real-time information updates, such as answering technical questions, summarizing current events, or addressing domain-specific inquiries.
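The generation phase amounts to placing the retrieved snippets next to the user's question before calling the model. The sketch below shows one common way to assemble such a prompt; the wording of the instructions is an assumption, and `generate` is a hypothetical placeholder for whatever LLM client is used, not a real library function.

```python
from typing import Callable, List


def build_prompt(query: str, retrieved: List[str]) -> str:
    """Put numbered retrieved snippets ahead of the question so the model answers from them."""
    context = "\n".join(f"[{i}] {snippet}" for i, snippet in enumerate(retrieved, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite snippets by number.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def answer(query: str, retrieved: List[str], generate: Callable[[str], str]) -> str:
    """`generate` is a hypothetical stand-in for an LLM call: prompt in, text out."""
    return generate(build_prompt(query, retrieved))


prompt = build_prompt("What did the central bank signal?", ["central bank signals more rate hikes"])
print(prompt)
```

Keeping prompt construction separate from the model call makes it easy to test and to swap in different LLM providers.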

The Challenges of Static RAGs

As AI development frameworks like LangChain and LlamaIndex simplify the creation of RAG systems, their industrial applications are rising. However, the increasing demand for RAG has highlighted some limitations of traditional static models. These challenges mainly stem from the reliance on static data sources such as documents, PDFs, and fixed datasets. While static RAG systems handle these types of information effectively, they often struggle with dynamic or frequently changing data.

One significant limitation of static RAGs is their dependence on vector databases, which require complete re-indexing whenever updates occur. This process can significantly reduce efficiency, particularly when interacting with real-time or constantly evolving data. Although vector databases are adept at retrieving unstructured data through approximate search algorithms, they lack the ability to deal with SQL-based relational databases, which require querying structured, tabular data. This limitation presents a considerable challenge in sectors like finance and healthcare, where proprietary data is often developed through complex, structured pipelines over many years. Furthermore, the reliance on static data means that in fast-paced environments, the responses generated by static RAGs can quickly become outdated or irrelevant.
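One way to bring structured, tabular data into a RAG prompt, shown here as an assumption rather than something the article prescribes, is to query the relational store with SQL and render the resulting rows as text context. The sketch uses Python's built-in `sqlite3` with an in-memory database; the `positions` table and its columns are hypothetical.

```python
import sqlite3


def rows_as_context(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Run a parameterized SQL query and render each row as 'column=value' text for a prompt."""
    cur = conn.execute(sql, params)
    columns = [d[0] for d in cur.description]
    return "\n".join(
        ", ".join(f"{c}={v}" for c, v in zip(columns, row)) for row in cur.fetchall()
    )


# Hypothetical portfolio table standing in for a proprietary relational dataset.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE positions (account TEXT, ticker TEXT, shares INTEGER)")
conn.executemany(
    "INSERT INTO positions VALUES (?, ?, ?)",
    [("acct-1", "MSFT", 10), ("acct-1", "NVDA", 5), ("acct-2", "MSFT", 3)],
)
print(rows_as_context(
    conn,
    "SELECT ticker, shares FROM positions WHERE account = ? ORDER BY ticker",
    ("acct-1",),
))
# ticker=MSFT, shares=10
# ticker=NVDA, shares=5
```

Parameterized queries (the `?` placeholders) matter here, since the filter values may originate from a user's natural-language request.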

Streaming Databases and RAG

While traditional RAG systems rely on static databases, industries like finance, healthcare, and live news increasingly turn to streaming databases for real-time data management. Unlike static databases, streaming databases continuously ingest and process information, making updates available instantly. This immediacy is crucial in fields where accuracy and timeliness matter, such as tracking stock market changes, monitoring patient health, or reporting breaking news. The event-driven nature of streaming databases allows fresh data to be accessed without the delays and inefficiencies of re-indexing that are common in static systems.

However, current ways of interacting with streaming databases still rely heavily on traditional querying methods, which can struggle to keep pace with the dynamic nature of real-time data. Manually querying streams or developing custom pipelines can be cumbersome, especially when vast amounts of data must be analyzed quickly. The lack of intelligent systems that can understand and generate insights from this continuous data flow highlights the need for innovation in real-time data interaction.

This situation creates an opportunity for a new era of AI-powered interaction, where RAG models seamlessly integrate with streaming databases. By combining RAG’s ability to generate responses with real-time knowledge, AI systems can retrieve the latest data and present it in a relevant and actionable way. Merging RAG with streaming databases could redefine how we handle dynamic information, offering businesses and individuals a more flexible, accurate, and efficient way to engage with ever-changing data. Imagine financial giants like Bloomberg using chatbots to perform real-time statistical analysis based on fresh market insights.
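The core idea, that new events become retrievable the moment they arrive with no batch re-indexing, can be sketched with a small in-memory store. This is a hypothetical illustration, not the API of any real streaming database: keyword matching stands in for semantic retrieval, and the recency window decides which events are fresh enough to place in a RAG prompt.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    timestamp: float  # seconds since the epoch
    text: str


class StreamingContextStore:
    """Hypothetical in-memory store: each event is searchable as soon as it is ingested.

    Only the newest `max_events` events are kept; older ones fall off automatically.
    """

    def __init__(self, max_events: int = 10_000):
        self.events = deque(maxlen=max_events)

    def ingest(self, event: Event) -> None:
        self.events.append(event)  # no re-indexing step

    def latest(self, keyword: str, window_s: float, now: Optional[float] = None, k: int = 3) -> List[Event]:
        """Return up to k newest events mentioning `keyword` within the last `window_s` seconds."""
        now = time.time() if now is None else now
        hits = [
            e for e in self.events
            if keyword.lower() in e.text.lower() and now - e.timestamp <= window_s
        ]
        return sorted(hits, key=lambda e: e.timestamp, reverse=True)[:k]


store = StreamingContextStore()
store.ingest(Event(10.0, "MSFT quote 399.0"))
store.ingest(Event(100.0, "MSFT quote 410.2"))
store.ingest(Event(160.0, "MSFT quote 411.0"))
fresh = store.latest("msft", window_s=120, now=170.0)
context = "\n".join(e.text for e in fresh)  # would be placed into the RAG prompt
print(context)
```

The event at t=10 is excluded because it falls outside the 120-second window, which is the kind of freshness guarantee a static index rebuilt on a schedule cannot easily provide.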

Use Cases

The integration of RAG with data streams has the potential to transform various industries. Some notable use cases are:

Real-Time Financial Advisory Platforms: In the finance sector, integrating RAG and streaming databases can enable real-time advisory systems that offer immediate, data-driven insights into stock market movements, currency fluctuations, or investment opportunities. Investors could query these systems in natural language to receive up-to-the-minute analyses, helping them make informed decisions in rapidly changing environments.

Dynamic Healthcare Monitoring and Assistance: In healthcare, where real-time data is critical, the integration of RAG and streaming databases could redefine patient monitoring and diagnostics. Streaming databases would ingest patient data from wearables, sensors, or hospital records in real time. At the same time, RAG systems could generate personalized medical recommendations or alerts based on the most current information. For example, a doctor could ask an AI system for a patient’s latest vitals and receive real-time suggestions on possible interventions, considering historical records and immediate changes in the patient’s condition.

Live News Summarization and Analysis: News organizations often process vast amounts of data in real time. By combining RAG with streaming databases, journalists or readers could instantly access concise, real-time insights about news events, enhanced with the latest updates as they unfold. Such a system could quickly relate older information with live news feeds to generate context-aware narratives or insights about ongoing global events, offering timely, comprehensive coverage of dynamic situations like elections, natural disasters, or stock market crashes.

Live Sports Analytics: Sports analytics platforms can benefit from the convergence of RAG and streaming databases by offering real-time insights into ongoing games or tournaments. For example, a coach or analyst could query an AI system about a player’s performance during a live match, and the system would generate a report using historical data and real-time game statistics. This could enable sports teams to make informed decisions during games, such as adjusting strategies based on live data about player fatigue, opponent tactics, or game conditions.
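The use cases above share a pattern: keep a rolling view of a live metric (a stock price, a patient's heart rate, a player's speed) so the latest value and its recent trend can be handed to the model alongside the question. A minimal sketch of that pattern follows; the `SlidingWindow` class and the heart-rate readings are hypothetical, and a streaming database would normally compute such aggregates itself.

```python
from collections import deque
from typing import Dict


class SlidingWindow:
    """Keeps the last `size` readings of a live metric and summarizes them."""

    def __init__(self, size: int):
        self.values = deque(maxlen=size)

    def push(self, value: float) -> None:
        self.values.append(value)

    def summary(self) -> Dict[str, float]:
        if not self.values:
            raise ValueError("no readings yet")
        latest = self.values[-1]
        avg = sum(self.values) / len(self.values)
        return {"latest": latest, "mean": avg, "change_vs_mean": latest - avg}


hr = SlidingWindow(size=3)
for bpm in [72, 75, 78, 96]:  # hypothetical readings from a wearable
    hr.push(bpm)
s = hr.summary()
print(f"Latest heart rate {s['latest']} bpm vs recent mean {s['mean']:.1f} bpm")
# Latest heart rate 96 bpm vs recent mean 83.0 bpm
```

A sentence like the printed one is compact enough to include in a prompt, letting the model reason about a sudden change without seeing the raw stream.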

The Bottom Line

While traditional RAG systems rely on static knowledge bases, their integration with streaming databases empowers businesses across various industries to harness the immediacy and accuracy of live data. From real-time financial advisories to dynamic healthcare monitoring and instant news analysis, this fusion enables more responsive, intelligent, and context-aware decision-making. The potential of RAG-powered systems to transform these sectors highlights the need for ongoing development and deployment to enable more agile and insightful data interactions.
